Combining the Linux grep command with pipelines is a powerful way to filter the contents of files or the output of other commands and extract only the information you need. In this post, we’ll explore how to use grep with pipelines effectively.

What is a Pipeline?

First, let’s briefly explain what a pipeline is. In Linux, a pipeline (|) is a feature that passes the output of one command as input to another command. By combining multiple commands, you can simplify complex tasks. For example, you can filter the output of the ls command with grep, or you can use it to find specific processes from the list generated by the ps command.

Basic Usage of Pipelines

Pipelines are used as follows:

    command1 | command2

In this case, the output of command1 is used as the input for command2. Now, let’s look at specific examples of using pipelines with grep.
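Before turning to grep, a minimal sketch of the general form may help. It chains three commands instead of two; the sample words and the variable name first are made up for this illustration.

```shell
# Sample input is generated with printf so the example does not depend on any files.
# sort orders the lines alphabetically; head -n 1 keeps only the first one.
first=$(printf 'banana\napple\ncherry\n' | sort | head -n 1)
echo "$first"
```

Each stage only sees what the previous stage wrote, so the stages can be swapped or extended without touching the others.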

How to Use grep with Pipelines

When you combine grep with pipelines, you can easily perform various data processing tasks. Below are a few representative examples of using grep with pipelines.

Filtering File Listings

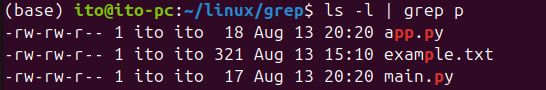

If you want to filter only files whose names contain the letter “p,” you can use a pipeline with grep.

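    ls -l | grep p

As shown in the image below, only the lines containing the letter “p” remain. Keep in mind that grep tests each whole line of the ls -l output, so a “p” in the owner, group, or month column matches as well.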

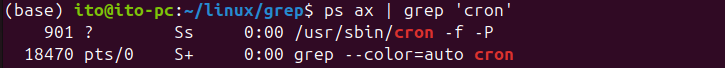
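If only the file names should be tested, one option is to pipe plain ls, which prints one name per line when its output goes to a pipe. The following is a minimal sketch under that assumption; the temporary directory and the three file names are hypothetical.

```shell
# Work in a throwaway directory so the example does not depend on existing files.
dir=$(mktemp -d)
touch "$dir/report.txt" "$dir/script.py" "$dir/data.csv"

# With plain ls, grep only ever sees file names, never permissions or dates.
matches=$(ls "$dir" | grep p)
echo "$matches"

# Clean up the temporary directory.
rm -r "$dir"
```

Here report.txt and script.py are kept, while data.csv is dropped because its name contains no “p”.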

Finding Specific Processes

The ps command displays a list of currently running processes. If you want to find a specific process within this list, you can use grep with a pipeline.

    ps ax | grep 'cron'

This command filters and displays only the processes that contain the string ‘cron’. Here, ps ax is a command that provides a brief list of all processes, and grep 'cron' shows only the lines that include the string ‘cron’.

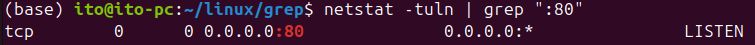

Checking Specific Network Ports

The netstat command is a tool that allows you to check the status of network connections. When you want to see only the services running on a specific port, using grep can be very convenient.

    netstat -tuln | grep ':80'

This command displays only the listening sockets on port 80 (typically used by web servers). Because grep matches substrings, the pattern ':80' also matches ports such as 8080.

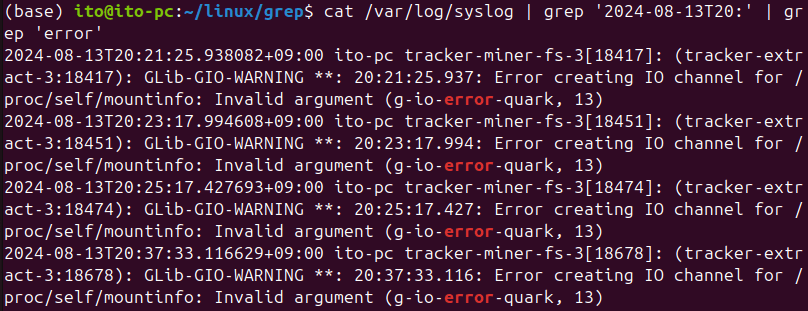
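A pattern like ':80' matches any line that merely contains those characters. One way to tighten it, sketched below, is to add a trailing space so the port number must end right after “80”. The sample text is made up to resemble netstat -tuln output, and real column spacing may differ between systems, so treat this as an illustration rather than a rule.

```shell
# Made-up sample resembling `netstat -tuln` output, so no network tools are required.
sample='tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:8080            0.0.0.0:*               LISTEN
tcp6       0      0 :::443                  :::*                    LISTEN'

# ':80' alone also matches the 8080 line (-c counts matching lines).
loose=$(printf '%s\n' "$sample" | grep -c ':80')

# A trailing space in the pattern requires the port number to end right after "80".
strict=$(printf '%s\n' "$sample" | grep -c ':80 ')

echo "loose=$loose strict=$strict"
```

With real data the same idea would look like netstat -tuln | grep ':80 '.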

Searching Logs for Specific Timeframes

Server log files are usually very long and complex. When you want to review logs that occurred within a specific timeframe, you can use a pipeline to easily extract the data you need.

    cat /var/log/syslog | grep '2024-08-13T20:' | grep 'error'

This command searches the syslog file for lines containing “error” that were logged between 20:00 and 20:59 on August 13, 2024. The two grep commands are chained with pipelines: the first filters the log down to the chosen timeframe, and the second narrows that result to error messages. The timestamp pattern must match the format your log actually uses.
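The same two-stage filter can be tried safely on sample data. The sketch below writes a few hypothetical log lines to a temporary file (the host name, program name, and messages are invented), lets the first grep read the file directly instead of going through cat, and adds -i so that “Error” is caught as well.

```shell
# Hypothetical log lines in a temporary file; real syslog timestamp formats vary by system.
log=$(mktemp)
cat > "$log" <<'EOF'
2024-08-13T19:58:02 host app[101]: error: disk almost full
2024-08-13T20:03:11 host app[101]: started backup
2024-08-13T20:17:45 host app[101]: Error: backup failed
2024-08-13T21:00:00 host app[101]: error: retry failed
EOF

# grep can read the file itself, so cat is not needed as the first stage.
# -i on the second grep also catches "Error" and "ERROR".
hits=$(grep '2024-08-13T20:' "$log" | grep -i 'error')
echo "$hits"

# Clean up the temporary file.
rm "$log"
```

Only the 20:17:45 line survives both filters: the other error lines fall outside the 20:00 hour, and the 20:03:11 line contains no error.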

Tips for Using grep with Pipelines

There are a few things to keep in mind when using grep with pipelines.

Case Sensitivity

By default, grep is case-sensitive. If you want to perform a case-insensitive search, you need to use the -i option.

    ps aux | grep -i 'cron'

Filtering Out grep Itself

When you use grep with commands like ps ax, the grep command itself can appear in the results, because its own command line contains the search string. To prevent this, you can append grep -v grep, which removes every line containing ‘grep’ from the results.

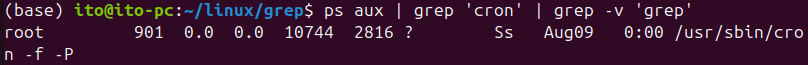

    ps aux | grep 'cron' | grep -v 'grep'

The figure shows that the grep process itself has been filtered out of the results.
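A common alternative that avoids the second grep is to wrap one character of the pattern in brackets, as in ps aux | grep '[c]ron'. The sketch below demonstrates the effect on made-up text that resembles ps output; the PIDs and the cron path are invented for the illustration.

```shell
# Made-up sample resembling `ps ax` output; the second line stands in for the grep process itself.
sample='  812 ?        Ss     0:00 /usr/sbin/cron -f
 4321 pts/0    S+     0:00 grep [c]ron'

# The pattern [c]ron matches the text "cron", but grep's own command line
# contains the literal text "[c]ron", which the pattern does not match.
found=$(printf '%s\n' "$sample" | grep '[c]ron')
echo "$found"
```

Only the cron line is printed, so no extra grep -v stage is needed.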

Caution with Regular Expressions

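grep supports powerful regular expressions. However, characters such as ., *, and [ have special meaning in a pattern, so a search string that contains them can match more than you intend. Quote patterns so the shell does not alter them, and use the -F option when you want the pattern treated as a literal string.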
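A small sketch of one such surprise: in a regular expression the dot matches any single character, so a search for a version number can match unrelated text. The sample strings below are made up for the illustration.

```shell
# Made-up version strings for illustration.
sample='version 1.5
version 125
version 1x5'

# As a regular expression, "." matches any single character, so all three lines match.
regex_count=$(printf '%s\n' "$sample" | grep -c '1.5')

# -F treats the pattern as a fixed string, so only the literal "1.5" matches.
fixed_count=$(printf '%s\n' "$sample" | grep -cF '1.5')

echo "regex=$regex_count fixed=$fixed_count"
```

Escaping the dot as '1\.5' would have the same effect as -F here, at the cost of a less readable pattern.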

Summary

Using grep in combination with pipelines in Linux allows you to perform complex data searches and filtering tasks very efficiently. This combination has become an essential tool in various practical situations and is particularly useful in log analysis, process management, and network monitoring.

While it may seem a bit complicated at first, once you get the hang of it, you’ll realize how powerful and convenient it is. Explore the use of grep and pipelines in different scenarios, and you’ll significantly enhance your Linux workflow efficiency.